Have you ever trusted a friend with your personal belongings, only for them to misuse those belongings, accidentally or on purpose? Then you'll understand why it matters that Apple® goes to great lengths to ensure that the data it collects from Apple devices cannot reveal private information about individual users. In today's digital landscape, data privacy has become a paramount concern for individuals and organizations alike. As data breaches and privacy violations continue to make headlines, safeguarding sensitive information has become a top priority. Apple, a global technology leader, has taken significant strides in this area with its large-scale use of Differential Privacy, a mathematical framework for learning from aggregate data without exposing any individual.

Differential Privacy, a privacy-first approach to machine learning

Collecting data to improve machine learning is far from new. Apple's competitors, especially Google, have also implemented many methods for collecting user data to personalize their services and apps; however, this often comes at the cost of data protection. There is a fine line between collecting user data to improve one's services and the risk of that personal data being used for harmful purposes. Today's debates about personalized advertising, for example, show that it is increasingly seen as a threat to technology users, especially smartphone users, rather than a convenience that makes browsing easier. There have been many attempts to strike a balance between data protection and training machine learning models on user data, and Apple's Differential Privacy is certainly a well-designed one.

Differential Privacy is built from several techniques. Rather than simply collecting large volumes of anonymized data, Apple's implementation relies on three key elements that give the company meaningful, statistically useful results while still protecting users' privacy. The first is hashing, which transforms user data so that statistically relevant results can be computed without storing the original information.

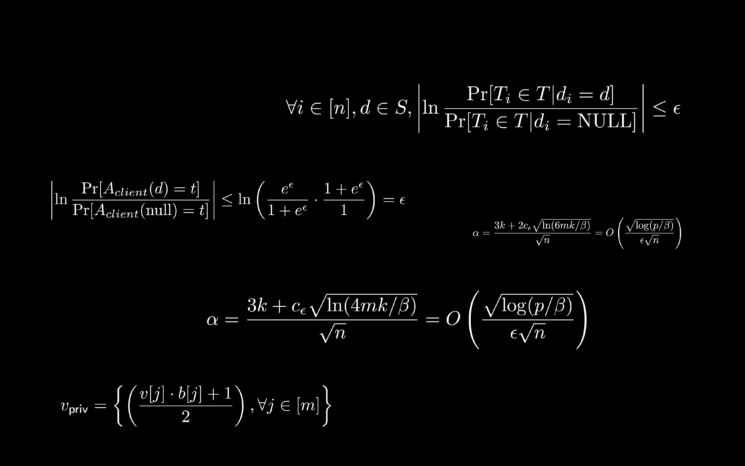
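A minimal sketch of the hashing idea, assuming SHA-256 from Python's standard `hashlib` and a hypothetical bucket count of 1,024 (Apple's actual hash functions and sizes may differ). An input of any length is reduced to a small integer from which the original text cannot be read back; `hash_to_bucket` is an illustrative name, not an Apple API.

```python
import hashlib


def hash_to_bucket(value: str, num_buckets: int = 1024) -> int:
    """Map a string of any length to one of `num_buckets` slots using SHA-256."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()  # 32-byte digest
    # Keep the first 8 bytes as an unsigned integer and reduce it modulo the
    # bucket count: the result is short and fixed-size, and the original text
    # cannot be read back from it.
    return int.from_bytes(digest[:8], "big") % num_buckets


if __name__ == "__main__":
    for text in ["hello", "hello world", "a much longer message typed on a phone"]:
        print(f"{text!r} -> bucket {hash_to_bucket(text)}")
```

Two devices that type the same word always land in the same bucket, so a server that already holds a list of candidate words can count how popular each one is without ever receiving the words themselves.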

Hashing maps the data to a much shorter, fixed-length value from which the original value cannot easily be recovered; unlike encryption, it is not meant to be reversed. The second element is sub-sampling: only a small portion of the data on a device is ever collected, so most private data never leaves the device. The third is noise injection, which adds random data to the original values so that each individual report is masked by randomness. There is a trade-off here: too much noise would mask the result entirely, making the derived information questionable. Because the statistical properties of the injected noise are known, however, its distortion can be corrected across a large number of reports, yielding a statistically useful result without revealing what any individual's original data were. The mathematical formula for noise injection is shown in the following figure:

source: iDB
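As a hedged illustration of noise injection in general (not necessarily the formula in Apple's figure), the sketch below uses classic randomized response, a textbook local differential privacy mechanism. Each device reports its true yes/no bit with probability p = e^ε / (1 + e^ε) and the opposite bit otherwise, where ε (epsilon) is the privacy parameter. The server never learns any individual answer, yet it can undo the known bias across many reports. The function names and the simulated 30% population are hypothetical.

```python
import math
import random
from typing import Sequence


def keep_probability(epsilon: float) -> float:
    """Probability of reporting the true bit under epsilon-randomized response."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomize_bit(true_bit: int, epsilon: float, rng: random.Random) -> int:
    """On-device step: keep the true bit with probability p, otherwise flip it."""
    return true_bit if rng.random() < keep_probability(epsilon) else 1 - true_bit


def estimate_true_fraction(reports: Sequence[int], epsilon: float) -> float:
    """Server step: correct the known bias of the noise across many reports.

    If a fraction pi of users truly hold 1, the expected share of reported 1s is
    pi * p + (1 - pi) * (1 - p). Solving for pi gives the expression below.
    """
    p = keep_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(42)
    epsilon = 1.0
    # Hypothetical population: 30% of 100,000 users truly have the attribute.
    truth = [1 if rng.random() < 0.30 else 0 for _ in range(100_000)]
    reports = [randomize_bit(b, epsilon, rng) for b in truth]
    print(f"raw noisy fraction: {sum(reports) / len(reports):.3f}")
    print(f"debiased estimate:  {estimate_true_fraction(reports, epsilon):.3f}")
```

With ε = 1 the raw noisy fraction should come out around 41%, well away from the true 30%, while the debiased estimate should land close to 30%. A smaller ε means more noise per report, so more reports are needed for the same accuracy; this is the trade-off described above.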

Moreover, instead of sending an entire matrix to Apple, each device sends only one randomly selected row of the generated matrix for evaluation. This preserves user privacy and keeps each device within its privacy budget, a limit on how much can be learned about any one user. This technique is called Count Mean Sketch. A more compact variant, the Hadamard Count Mean Sketch, sends only a single bit of data instead of a whole row of the matrix.
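The Count Mean Sketch can be illustrated as follows, loosely following the construction Apple describes publicly but with hypothetical parameters (k = 16 hash functions, m = 128 columns, ε = 4) and a hypothetical SHA-256-based hash family `h`. Each device picks one of k hash functions at random, one-hot encodes its value as a ±1 vector of length m, flips each entry with probability 1 / (1 + e^(ε/2)), and sends only that single noisy row plus the hash index. The server debiases each row, adds it to a k × m matrix, and estimates the count of any candidate value from that matrix. The function names are illustrative, and the one-bit Hadamard variant is not shown.

```python
import hashlib
import math
import random
from typing import List, Sequence, Tuple

Report = Tuple[List[int], int]  # (noisy +/-1 row of length m, hash index j)


def h(j: int, value: str, m: int) -> int:
    """Hypothetical hash family: SHA-256 of 'j:value', reduced modulo m."""
    digest = hashlib.sha256(f"{j}:{value}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % m


def client_report(value: str, k: int, m: int, epsilon: float,
                  rng: random.Random) -> Report:
    """On-device step: encode one value as a single noisy row of the sketch."""
    j = rng.randrange(k)                 # choose one of k hash functions at random
    row = [-1] * m
    row[h(j, value, m)] = 1              # one-hot encoding in {-1, +1}
    flip_prob = 1.0 / (1.0 + math.exp(epsilon / 2.0))
    noisy = [-x if rng.random() < flip_prob else x for x in row]
    return noisy, j                      # only this row and j leave the device


def server_aggregate(reports: Sequence[Report], k: int, m: int,
                     epsilon: float) -> List[List[float]]:
    """Server step: debias every report and add it to row j of a k x m matrix."""
    c = (math.exp(epsilon / 2.0) + 1.0) / (math.exp(epsilon / 2.0) - 1.0)
    sketch = [[0.0] * m for _ in range(k)]
    for noisy, j in reports:
        target = sketch[j]
        for i, x in enumerate(noisy):
            # c * x is an unbiased estimate of the original +/-1 entry;
            # (c * x + 1) / 2 maps it to a 0/1 indicator, scaled by k because
            # each row only receives about 1/k of all reports.
            target[i] += k * (c * x + 1.0) / 2.0
    return sketch


def estimate_count(sketch: List[List[float]], value: str, n: int,
                   k: int, m: int) -> float:
    """Estimate how many of the n reports encoded `value`."""
    avg = sum(sketch[j][h(j, value, m)] for j in range(k)) / k
    # Remove the expected contribution of hash collisions (about n / m per cell).
    return (m / (m - 1)) * (avg - n / m)


if __name__ == "__main__":
    rng = random.Random(1)
    k, m, epsilon = 16, 128, 4.0
    # Hypothetical population: 5,000 users, each contributing one emoji name.
    population = ["smile"] * 3000 + ["heart"] * 1500 + ["rocket"] * 500
    reports = [client_report(v, k, m, epsilon, rng) for v in population]
    sketch = server_aggregate(reports, k, m, epsilon)
    for word in ["smile", "heart", "rocket", "unicorn"]:
        print(word, round(estimate_count(sketch, word, len(population), k, m)))
```

Estimates for values nobody sent should hover near zero. An occasional hash collision within a row adds some bias, which is why the sketch uses several hash functions and averages across rows.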

In other words, if users allow their Apple device to send feedback and diagnostic information to Apple or to App Store® app developers, the device modifies that information before sending it, so that it remains useful feedback for developers but does not reveal the user's personal habits or information.

Of course, users who do not want to provide any data to Apple can turn off data collection completely at any time. In that case, however, Apple and app developers receive no feedback with which to improve functionality. Differential Privacy is designed precisely so that users do not have to worry about allowing feedback to be sent to Apple.

Where and how does Apple use Differential Privacy?

Apple introduced this method to improve the suggestions and intelligence of several features, such as QuickType®, emoji suggestions, Safari®, and Health Type usage in the Health app. In these features, data is privatized on the device before it is sent, which removes private information from the results. When the feedback arrives on Apple's servers, the aggregated data is visible, but the exact data of individual users is not. Even data that has been de-identified and aggregated in this way can be accessed only by the responsible team.

The method aggregates data from these sources to improve suggestions and to gather feedback on specific features, for example how accurately health data is measured, by looking at how much data users edit in the Health app rather than at the actual content of their personal information. In Safari, it helps determine which deep links are popular or frequently visited without revealing user identity.

What about enterprises?

This is an efficient way to improve Apple's products and services without putting users at risk. At first glance it might seem less relevant to businesses, since many would assume the topic concerns individual Apple users rather than corporations, but that is not the case. Many companies give their employees Apple workstations or allow a bring-your-own-device (BYOD) arrangement for various reasons we have explored in previous articles, and the most important argument in favor of Apple at work is the strong security and data protection of these devices. Employees store a great deal of sensitive data on their smartphones and computers, and many also use their work devices for personal purposes.

A mechanic may receive automated technical alerts and messages on their Apple Watch, but the same watch also measures their health data. A salesperson uses an iPhone or MacBook for work while traveling but might also look up personal content in Safari or message friends and colleagues on the road. The list could go on: this is the reality of the modern work environment, and it demands a rigorous, conscientious approach to data protection; otherwise, confidential company information could leak together with employees' personal data. At the same time, improving the features used in the enterprise environment through feedback is an essential goal for organizations.

Although Apple introduced Differential Privacy years ago, at WWDC 2016, the need for better machine learning, together with stronger and more robust security measures, is growing rapidly. Well-constructed methods like these could support the future of privacy protection while still helping to improve machine learning, user experience, and efficiency.